🛡️ Edge-Native Enterprise: How Nexarq.ai Built on the Cloudflare Developer Platform

Introduction: The challenge of modern scale

Building a successful web platform today means delivering content globally at lightning speed while running sophisticated backend logic—all securely. At Nexarq.ai, we faced this challenge for our public-facing AI application and our internal operational tools. We chose the Cloudflare Developer Platform as our unified operating system. By leaning on Workers, Workers AI, D1, R2, Vectorize, and KV, we deployed everything—from static marketing assets to a secure internal CRM—to the global edge. This article details the reference architecture we adopted and demonstrates how Cloudflare pairs performance with a cohesive developer experience.

Section 1: Nexarq.ai — static, dynamic, and AI at the edge

The public Nexarq.ai website needs extremely low latency for both the initial page load (static content) and the conversational AI functionality (dynamic compute). We rely on Workers to orchestrate authentication, AI inference, and storage from a single entrypoint.

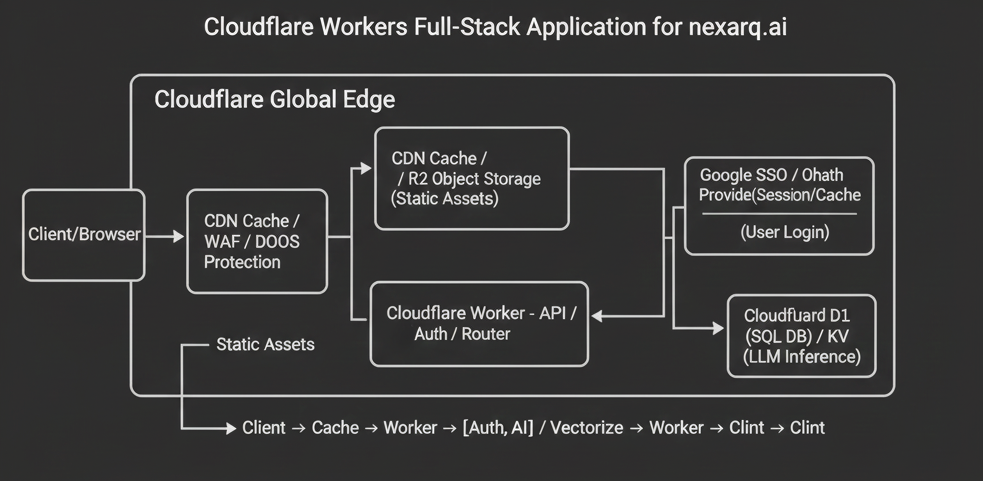

Public stack overview – Cloudflare Pages serves static assets from R2, while a Cloudflare Worker routes traffic through CDN cache, authentication, Workers AI, and the data tier so everything stays close to the user.

Architecture Diagram 1 – Nexarq.ai public stack across CDN cache, Workers, Workers AI, D1/KV, and R2.
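The request flow in the diagram can be sketched as a small routing layer. This is a minimal illustration rather than Nexarq.ai's actual code: the URL paths, the `session` cookie name, the `session:` key prefix, and the `SessionStore` interface (a stand-in for the `get` method of a Workers KV binding) are all assumptions made for the example.

```typescript
// Minimal structural stand-in for a Workers KV namespace binding.
// Only `get` is modeled because that is all this sketch needs.
interface SessionStore {
  get(key: string): Promise<string | null>;
}

type Route = "auth" | "chat" | "api" | "static";

// Hypothetical URL layout; the article does not specify the real paths.
function classifyRoute(pathname: string): Route {
  if (pathname.startsWith("/auth/")) return "auth"; // OAuth redirects and callbacks
  if (pathname === "/api/chat") return "chat"; // AI inference
  if (pathname.startsWith("/api/")) return "api"; // data tier
  return "static"; // everything else falls through to static assets
}

// Extract one cookie value from a raw Cookie header.
function readCookie(header: string | null, name: string): string | null {
  if (!header) return null;
  for (const part of header.split(";")) {
    const eq = part.indexOf("=");
    if (eq === -1) continue;
    if (part.slice(0, eq).trim() === name) return part.slice(eq + 1).trim();
  }
  return null;
}

// Look up the session record for the request, or null if there is none.
// The `session:` key prefix is an assumption for this example.
async function resolveSession(
  store: SessionStore,
  cookieHeader: string | null,
): Promise<string | null> {
  const sessionId = readCookie(cookieHeader, "session");
  if (!sessionId) return null;
  return store.get(`session:${sessionId}`);
}
```

In a real Worker, `classifyRoute` would receive `new URL(request.url).pathname` inside the `fetch` handler, and `resolveSession` would be called with the KV binding and the request's `Cookie` header before any protected route runs.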

Elaboration: public site components

- Static content (F & B) – All public assets (HTML, CSS, images) live in Cloudflare R2 and deploy via Cloudflare Pages. The CDN cache serves them from a location close to the user, which keeps initial loads under a second.

- Authentication & dynamic functions (C) – A Cloudflare Worker acts as the router/API layer, coordinating OAuth redirects, session validation, and API orchestration.

- Google SSO / OAuth (G) – The Worker drives the auth handshake with Google, validating tokens and writing the resulting session ID to Workers KV so later requests can look it up with low latency.

- Backend logic – The same Worker endpoint fans out to data services, AI inference, and caching layers to keep origins minimal.

- AI engine (D) – Workers AI powers the LLM-infused chat UX, while Vectorize handles RAG-style context retrieval co-located with the user.

- Data persistence (E) – Cloudflare D1 stores structured user and application data; Workers KV keeps ephemeral session state and configuration.
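The AI path described in the list above (embed the question, retrieve context from Vectorize, then call the chat model) can be sketched as follows. Treat this as a sketch under stated assumptions: the binding names `AI` and `VECTORIZE`, both model IDs, the `text` metadata field, and the 0.5 score cutoff (which assumes a similarity metric where higher means closer) are illustrative choices, and the interfaces are structural stand-ins modeled on the Workers AI and Vectorize bindings rather than the official type definitions.

```typescript
// Structural stand-ins for the Workers AI and Vectorize bindings.
// The shapes are assumptions; check them against the current binding docs.
interface VectorMatch {
  id: string;
  score: number;
  metadata?: Record<string, unknown>;
}

interface ChatMessage {
  role: "system" | "user";
  content: string;
}

interface RagEnv {
  AI: { run(model: string, input: unknown): Promise<any> };
  VECTORIZE: {
    query(
      vector: number[],
      options: { topK: number; returnMetadata: boolean },
    ): Promise<{ matches: VectorMatch[] }>;
  };
}

// Placeholder model IDs; the article does not say which models are used.
const EMBEDDING_MODEL = "@cf/baai/bge-base-en-v1.5";
const CHAT_MODEL = "@cf/meta/llama-3.1-8b-instruct";

// Turn retrieved matches into a grounded prompt. Assumes each vector was
// stored with its source text under a hypothetical `text` metadata field.
function buildRagMessages(
  question: string,
  matches: VectorMatch[],
  minScore = 0.5,
): ChatMessage[] {
  const context = matches
    .filter((m) => m.score >= minScore && typeof m.metadata?.text === "string")
    .map((m) => `- ${m.metadata!.text as string}`)
    .join("\n");
  const system = context
    ? `Answer using only the context below.\n${context}`
    : "No relevant context was found; say so if you are unsure.";
  return [
    { role: "system", content: system },
    { role: "user", content: question },
  ];
}

// Embed the question, fetch the nearest stored chunks, then ask the chat model.
async function answerWithContext(env: RagEnv, question: string): Promise<string> {
  const embedding = await env.AI.run(EMBEDDING_MODEL, { text: [question] });
  const { matches } = await env.VECTORIZE.query(embedding.data[0], {
    topK: 3,
    returnMetadata: true,
  });
  const result = await env.AI.run(CHAT_MODEL, {
    messages: buildRagMessages(question, matches),
  });
  return result.response;
}
```

Keeping prompt assembly in a pure function (`buildRagMessages`) makes the retrieval logic testable without any bindings, while `answerWithContext` stays a thin wrapper around the three network calls.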

Section 2: Secure internal sites — CRM and operational tools

For internal tools like our CRM, security is paramount. Instead of building custom auth middleware, we wrap every request in Cloudflare’s Zero Trust model.

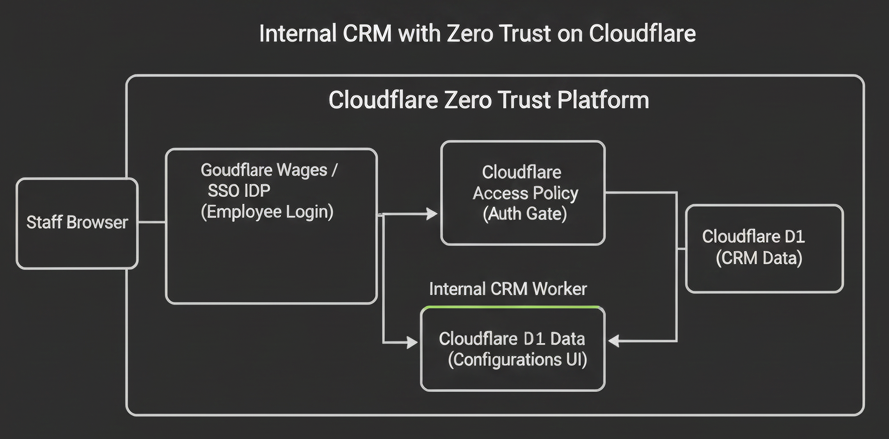

Internal Zero Trust overview – Staff traffic first hits Cloudflare Access (Zero Trust), which delegates authentication to Google Workspace. Only then does the request reach the CRM Worker, which talks to D1, KV, and Pages assets.

Architecture Diagram 2 – Internal CRM stack with Cloudflare Access, Google Workspace, Workers, D1, KV, and Pages.

Elaboration: internal CRM components

- Zero Trust gate (B — Cloudflare Access) – Every staff request hits an Access policy that validates Google Workspace group membership before the Worker ever sees the payload.

- Worker is protected – The internal CRM Worker receives only authenticated traffic, allowing the app to focus on business logic instead of auth plumbing.

- Worker compute (C) – Identical runtime to the public site, so we reuse libraries, Wrangler workflows, and deployment strategies.

- Data persistence (D & E) – D1 stores structured CRM data; Workers KV hosts feature flags or UI preferences referenced on each request.

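- Google SSO integration – Cloudflare Access forwards the authenticated user's identity to the Worker in request headers (notably the signed Cf-Access-Jwt-Assertion token), so the Worker can apply RBAC policies inline based on attributes such as email and groups.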
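The inline RBAC mentioned above could look something like the following sketch. It assumes the user's group list has already been extracted from a verified Access identity. The group addresses, role names, and permission table are hypothetical, and defaulting to a read-only `viewer` role is a design assumption, since Access has already authenticated the caller.

```typescript
type Role = "admin" | "sales" | "viewer";
type Action = "read" | "write" | "delete";

// Hypothetical Google Workspace group names mapped to CRM roles.
const GROUP_ROLES: Record<string, Role> = {
  "crm-admins@example.com": "admin",
  "sales@example.com": "sales",
};

// What each role may do; illustrative only.
const PERMISSIONS: Record<Role, readonly Action[]> = {
  admin: ["read", "write", "delete"],
  sales: ["read", "write"],
  viewer: ["read"],
};

// Roles ordered from most to least privileged so the strongest match wins.
const ROLE_ORDER: readonly Role[] = ["admin", "sales", "viewer"];

// Pick the most privileged role granted by any of the user's groups.
// Users with no mapped group fall back to the read-only viewer role.
function resolveRole(groups: readonly string[]): Role {
  const granted = new Set<Role>(["viewer"]);
  for (const group of groups) {
    const role = GROUP_ROLES[group.toLowerCase()];
    if (role) granted.add(role);
  }
  return ROLE_ORDER.find((r) => granted.has(r)) ?? "viewer";
}

// True when the user's resolved role permits the requested action.
function can(groups: readonly string[], action: Action): boolean {
  return PERMISSIONS[resolveRole(groups)].includes(action);
}
```

A handler would call `can(groups, "write")` before running a mutating D1 statement and return a 403 response otherwise. The Worker should verify the Access JWT's signature before trusting any identity attributes taken from it.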

Section 3: Security, reliability, and the developer experience

By adopting this edge-native architecture, we unlocked critical advantages:

- Inherent security – WAF, DDoS protection, and SSL/TLS ship by default for Nexarq.ai, while internal properties inherit Zero Trust enforcement without a VPN.

- Global reliability – Workers run across Cloudflare's global network and their storage bindings (D1, R2, KV) are fully managed, so we get high availability and fault tolerance with minimal DevOps overhead.

- Cohesive developer experience – The Wrangler CLI powers development, local testing, and deployment across both the public site and the CRM. One toolbox, one mental model, higher velocity.

With Cloudflare’s developer platform as our operating system, Nexarq.ai can iterate on customer-facing AI experiences and internal operations with the same playbook—secure by default, lightning fast, and inherently observable.